Forecast reconciliation

What if our forecasts should follow linear constraints?

Imagine you work at a retail company and you are responsible for forecasting total sales over the coming weeks. The company is spread across the country, and forecasts must be made for every city and state.

However, when the national and regional sales teams meet, your forecasts aren't consistent: "How can the state-level sales add up to more than the national figure?" Which forecasts should they base their decisions on?

Forecast reconciliation methods are simple and effective solutions to this problem. When you have a hierarchy of time series that must respect linear constraints (such as state sales summing to national sales), you can forecast each level independently and then reconcile the forecasts.

Let's suppose our company is based in Europe and has two branches, one in France and another in Italy, and we were asked for a seven-day-ahead forecast.

The intuition

Consider this dataset containing two years of a company's sales:

Total sales in Europe are the sum of French and Italian sales, so the three series must follow the linear constraint

$$y_{\text{Europe},t} = y_{\text{France},t} + y_{\text{Italy},t}.$$

Note that this constraint defines a 2D plane in three-dimensional space: to be considered coherent, our observations and forecasts must lie on that plane.

Applying well-known statistical forecasting methods such as ARIMA and ETS to each series separately won't necessarily generate estimates that satisfy that constraint. The figure below illustrates how the forecasts of an ARIMA model can diverge from it.

It seems we can't rely on those methods alone: forecasting each level independently is not enough. How can we guarantee good-quality, coherent forecasts?

Forecasting coherently

The literature provides two main approaches to forecasting coherently: single-level approaches and reconciliation methods (Athanasopoulos et al., 2020).

Single-level approaches

Single-level approaches choose one level of the hierarchy to produce estimates and then extend the forecasts to the other levels.

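The bottom-up approach requires forecasts for the most disaggregated level of the hierarchy. Let $n$ be the total number of series and $m$ the number of bottom-level series. In our sales example, $n = 3$ and $m = 2$. The coherent forecasts are given by:

$$\tilde{\mathbf{y}}_h = \mathbf{S}\mathbf{G}\hat{\mathbf{y}}_h = \mathbf{S}\hat{\mathbf{b}}_h,$$

where $\hat{\mathbf{y}}_h \in \mathbb{R}^n$ holds the h-step-ahead base forecasts for all series and $\hat{\mathbf{b}}_h \in \mathbb{R}^m$ our h-step-ahead forecasts for the bottom-level ones. $\mathbf{S}$ is the $n \times m$ summing matrix that captures the hierarchy constraints. In the case of our sales forecasting problem, with series ordered as (Europe, France, Italy), it's equal to:

$$\mathbf{S} = \begin{bmatrix} 1 & 1 \\ 1 & 0 \\ 0 & 1 \end{bmatrix}$$

and

$$\mathbf{G} = \begin{bmatrix} 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix},$$

which simply selects the bottom-level entries of $\hat{\mathbf{y}}_h$, so that $\hat{\mathbf{b}}_h = \mathbf{G}\hat{\mathbf{y}}_h$.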

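These bottom levels, however, are usually the most volatile, with the lowest signal-to-noise ratio. Another approach is top-down, which forecasts the top-level series and then disaggregates it to build forecasts for the lower levels of the hierarchy:

$$\tilde{\mathbf{y}}_h = \mathbf{S}\mathbf{p}\,\hat{y}_{\text{Europe},h},$$

where $\mathbf{S}$ is the summing matrix and $\mathbf{p} = [p_{\text{France}}, p_{\text{Italy}}]'$ holds the share of the total assigned to each bottom-level series, with $p_{\text{France}} + p_{\text{Italy}} = 1$.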

The proportions may be estimated from historical observations (Gross & Sohl, 1990). This approach, however, cannot capture the particularities of the lower levels of the hierarchy. Single-level approaches have the advantage of being simple, but they ignore the information available at the other levels. Reconciliation methods address this by using the forecasts of all series, and the correlations between their errors, to produce a coherent estimate.
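To make the two single-level approaches concrete, here is a minimal NumPy sketch. It is not the author's code. It assumes the series are ordered as (Europe, France, Italy), and the forecast values and sales history are made up. `bottom_up`, `top_down` and `historical_proportions` are hypothetical helper names. The proportions are estimated by averaging historical shares, which is one of the simple options discussed by Gross & Sohl (1990).

```python
import numpy as np

# Summing matrix S: rows are (Europe, France, Italy), columns are the
# bottom-level series (France, Italy).
S = np.array([[1.0, 1.0],
              [1.0, 0.0],
              [0.0, 1.0]])


def bottom_up(y_hat: np.ndarray, S: np.ndarray) -> np.ndarray:
    """Keep the bottom-level base forecasts and sum them up the hierarchy."""
    n, m = S.shape
    # G = [0 | I_m] picks the last m entries of y_hat (the bottom level).
    G = np.hstack([np.zeros((m, n - m)), np.eye(m)])
    return S @ G @ y_hat


def top_down(y_hat: np.ndarray, S: np.ndarray, p: np.ndarray) -> np.ndarray:
    """Split the top-level forecast y_hat[0] with proportions p (summing to 1)."""
    return S @ (p * y_hat[0])


def historical_proportions(history: np.ndarray) -> np.ndarray:
    """Average share of each bottom series in the total; history is (T, m)."""
    shares = history / history.sum(axis=1, keepdims=True)
    return shares.mean(axis=0)


# Made-up, incoherent base forecasts: Europe = 100, but France + Italy = 95.
y_hat = np.array([100.0, 55.0, 40.0])
print(bottom_up(y_hat, S))        # Europe becomes 95, countries unchanged

# Made-up history of (France, Italy) sales; average shares are 0.55 and 0.45.
history = np.array([[60.0, 40.0],
                    [50.0, 50.0]])
p = historical_proportions(history)
print(top_down(y_hat, S, p))      # Europe stays 100, countries become about 55 and 45
```

Bottom-up keeps the country forecasts and recomputes Europe. Top-down does the opposite: it keeps the Europe forecast and overwrites the country forecasts.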

Forecast reconciliation

Remember how, in Figure 2, our observations lie on a plane and incoherent forecasts lie outside of it. Let $\mathfrak{s}$ be this coherent subspace: the $m$-dimensional subspace of $\mathbb{R}^n$, spanned by the columns of $\mathbf{S}$, in which the linear constraints hold. Our h-step-ahead forecasts $\tilde{\mathbf{y}}_h$ are coherent if $\tilde{\mathbf{y}}_h \in \mathfrak{s}$. If we take our base forecasts $\hat{\mathbf{y}}_h$ and project them onto the coherent subspace, we get coherent forecasts.

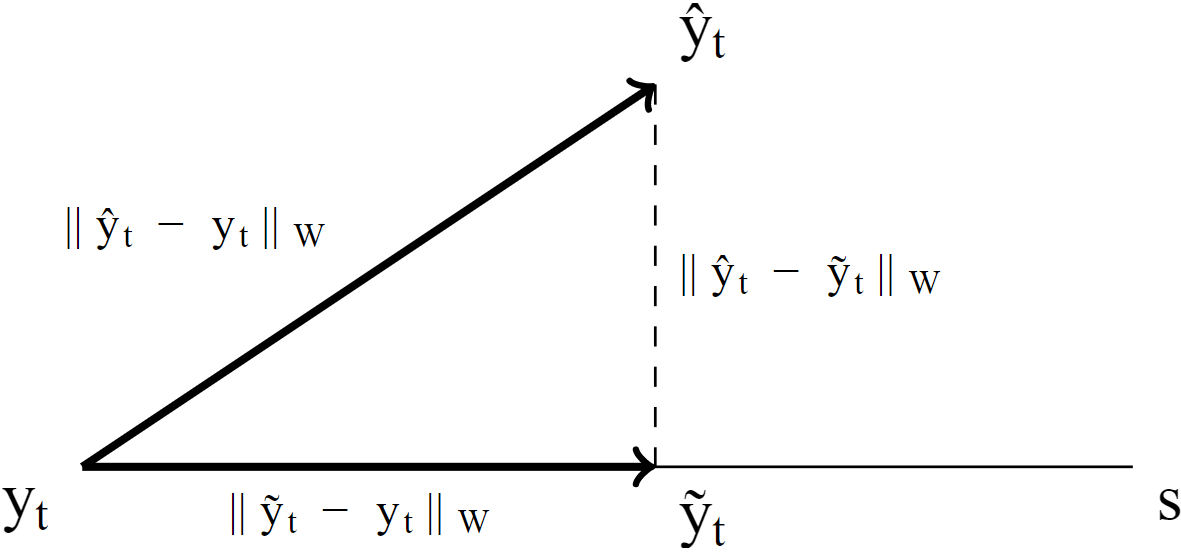
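Coherence can also be checked numerically. Here is a minimal NumPy sketch that uses the same (Europe, France, Italy) ordering and the summing matrix `S`. A vector is coherent exactly when it lies in the column space of `S`, so a least-squares fit onto those columns leaves a residual that is zero up to rounding. The function name `is_coherent` and its tolerance are illustrative choices.

```python
import numpy as np

S = np.array([[1.0, 1.0],
              [1.0, 0.0],
              [0.0, 1.0]])


def is_coherent(y: np.ndarray, S: np.ndarray, tol: float = 1e-9) -> bool:
    """True if y lies in the coherent subspace, i.e. y = S b for some b."""
    # Least-squares fit of y onto the columns of S; a (numerically) zero
    # residual means y is in the column space of S.
    b, *_ = np.linalg.lstsq(S, y, rcond=None)
    residual = np.linalg.norm(y - S @ b)
    return bool(residual <= tol * max(1.0, np.linalg.norm(y)))


print(is_coherent(np.array([95.0, 55.0, 40.0]), S))   # 95 = 55 + 40
print(is_coherent(np.array([100.0, 55.0, 40.0]), S))  # 100 != 55 + 40
```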

Panagiotelis et al. (2021) provided this geometric view of forecast reconciliation. They reframed the forecast reconciliation problem as "how should we choose the projection matrix so that our error metric is reduced?" Suppose you want to guarantee that the reconciled forecasts are never further from the true values than the base forecasts, measured as total squared error across the hierarchy. Orthogonal projection has exactly that property. This procedure, however, treats all errors in the hierarchy equally, which is not always desirable. The forecast of total sales, for example, might be more important than sales in a specific country. Or maybe France plays a critical role in the business.

In such cases, you may go for oblique projections. They work as if the space were stretched along the more important axes before an orthogonal projection is applied, so that errors along those axes weigh more. There's also a special kind of oblique projection that minimizes the expected error using an estimate of the error covariance matrix (Wickramasuriya et al., 2019). They called it MinT reconciliation.

Orthogonal projection

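The orthogonal projection has the interesting property of yielding the point in the coherent subspace $\mathfrak{s}$ closest to the base forecasts $\hat{\mathbf{y}}_h$. In other words, it solves the optimization problem:

$$\tilde{\mathbf{y}}_h = \underset{\mathbf{y} \in \mathfrak{s}}{\arg\min}\; \|\hat{\mathbf{y}}_h - \mathbf{y}\|_2^2,$$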

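where $\tilde{\mathbf{y}}_h$ is the reconciled forecast. Hereafter, a tilde denotes forecasts after reconciliation and a hat denotes base forecasts. The projection matrix $\mathbf{P}$ can be built directly from the matrix $\mathbf{S}$, which captures the hierarchical structure:

$$\mathbf{P} = \mathbf{S}(\mathbf{S}'\mathbf{S})^{-1}\mathbf{S}' = \mathbf{S}\mathbf{G},$$

where $\mathbf{G} = (\mathbf{S}'\mathbf{S})^{-1}\mathbf{S}'$ maps $\hat{\mathbf{y}}_h$ to $\tilde{\mathbf{b}}_h$, producing new bottom-level forecasts.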
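As a worked example, take our hierarchy with series ordered as (Europe, France, Italy), so that $\mathbf{S}$ has rows $(1, 1)$, $(1, 0)$ and $(0, 1)$. The orthogonal projection then works out to:

$$\mathbf{S}'\mathbf{S} = \begin{bmatrix} 2 & 1 \\ 1 & 2 \end{bmatrix}, \qquad (\mathbf{S}'\mathbf{S})^{-1} = \frac{1}{3}\begin{bmatrix} 2 & -1 \\ -1 & 2 \end{bmatrix},$$

$$\mathbf{G} = (\mathbf{S}'\mathbf{S})^{-1}\mathbf{S}' = \frac{1}{3}\begin{bmatrix} 1 & 2 & -1 \\ 1 & -1 & 2 \end{bmatrix}, \qquad \mathbf{P} = \mathbf{S}\mathbf{G} = \frac{1}{3}\begin{bmatrix} 2 & 1 & 1 \\ 1 & 2 & -1 \\ 1 & -1 & 2 \end{bmatrix}.$$

Writing $d = \hat{y}_{\text{Europe}} - \hat{y}_{\text{France}} - \hat{y}_{\text{Italy}}$ for the incoherence of the base forecasts, the reconciled Europe forecast is lowered by $d/3$ and each country forecast is raised by $d/3$.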

We can easily reconcile our forecasts:

$$\tilde{\mathbf{y}}_h = \mathbf{S}(\mathbf{S}'\mathbf{S})^{-1}\mathbf{S}'\hat{\mathbf{y}}_h.$$

If one of the requirements is to change the base forecasts as little as possible, orthogonal projection is the way to go. However, it treats all errors in the hierarchy as equally important.
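The orthogonal projection can be sketched in NumPy as follows. The base forecasts are made up (Europe = 100 versus France + Italy = 95). The sketch solves a linear system instead of inverting $\mathbf{S}'\mathbf{S}$ explicitly. It then compares how far the orthogonal projection and bottom-up move the base forecasts.

```python
import numpy as np

S = np.array([[1.0, 1.0],
              [1.0, 0.0],
              [0.0, 1.0]])


def orthogonal_projection(S: np.ndarray) -> np.ndarray:
    """P = S (S'S)^{-1} S', the orthogonal projection onto the columns of S."""
    # Solve (S'S) G = S' rather than forming (S'S)^{-1} explicitly.
    G = np.linalg.solve(S.T @ S, S.T)
    return S @ G


P = orthogonal_projection(S)
y_hat = np.array([100.0, 55.0, 40.0])    # made-up, incoherent base forecasts
y_tilde = P @ y_hat                      # about [98.33, 56.67, 41.67]

# Bottom-up would move the base forecasts further (distance 5 vs. about 2.89).
y_bottom_up = np.array([95.0, 55.0, 40.0])
print(np.linalg.norm(y_hat - y_tilde), np.linalg.norm(y_hat - y_bottom_up))
```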

Oblique projection

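When you want to avoid some errors in the hierarchy more than others, oblique projection is a good choice. It can be seen as an orthogonal projection in a space with a different metric, $\|\mathbf{x}\|_{\mathbf{W}^{-1}}^2 = \mathbf{x}'\mathbf{W}^{-1}\mathbf{x}$, where $\mathbf{W}$ is a positive definite matrix. In that case, the projection matrix is:

$$\mathbf{P}_{\mathbf{W}} = \mathbf{S}(\mathbf{S}'\mathbf{W}^{-1}\mathbf{S})^{-1}\mathbf{S}'\mathbf{W}^{-1}.$$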

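Note that $\mathbf{W} = \mathbf{I}$ is equivalent to orthogonal projection in Euclidean space. The true values $\mathbf{y}_{T+h}$ are themselves coherent. The Pythagorean theorem therefore guarantees that $\tilde{\mathbf{y}}_h$ will always be at least as close to them as the base forecast $\hat{\mathbf{y}}_h$ in the $\mathbf{W}^{-1}$-weighted norm:

$$\|\tilde{\mathbf{y}}_h - \mathbf{y}_{T+h}\|_{\mathbf{W}^{-1}}^2 = \|\hat{\mathbf{y}}_h - \mathbf{y}_{T+h}\|_{\mathbf{W}^{-1}}^2 - \|\hat{\mathbf{y}}_h - \tilde{\mathbf{y}}_h\|_{\mathbf{W}^{-1}}^2 \le \|\hat{\mathbf{y}}_h - \mathbf{y}_{T+h}\|_{\mathbf{W}^{-1}}^2.$$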
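Here is a minimal NumPy sketch of the oblique projection and of the Pythagorean guarantee. The weight matrix `W = diag(1, 2, 2)` and the "true" values are hypothetical and chosen only for illustration; they are not the $\mathbf{W}$ used in the post's example. A smaller entry in `W` means a larger weight in $\mathbf{W}^{-1}$. Errors in total European sales therefore count twice as much here, and the Europe forecast is moved the least.

```python
import numpy as np

S = np.array([[1.0, 1.0],
              [1.0, 0.0],
              [0.0, 1.0]])


def oblique_projection(S: np.ndarray, W: np.ndarray) -> np.ndarray:
    """P_W = S (S' W^{-1} S)^{-1} S' W^{-1} for a positive-definite W."""
    W_inv_S = np.linalg.solve(W, S)                  # W^{-1} S
    G = np.linalg.solve(S.T @ W_inv_S, W_inv_S.T)    # (S'W^{-1}S)^{-1} S'W^{-1}
    return S @ G


def w_norm(x: np.ndarray, W: np.ndarray) -> float:
    """Norm induced by W^{-1}: sqrt(x' W^{-1} x)."""
    return float(np.sqrt(x @ np.linalg.solve(W, x)))


W = np.diag([1.0, 2.0, 2.0])               # hypothetical weights
P_W = oblique_projection(S, W)
y_hat = np.array([100.0, 55.0, 40.0])      # made-up base forecasts
y_tilde = P_W @ y_hat                      # about [99, 57, 42]

y_true = np.array([102.0, 58.0, 44.0])     # made-up coherent "truth"
print(w_norm(y_tilde - y_true, W), w_norm(y_hat - y_true, W))
```

Here the incoherence of 5 units is split in proportion to the diagonal of `W`: Europe moves by 1 and each country by 2.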

If, for example, we apply an oblique projection to our sales forecasts with , we obtain the following result:

MinT reconciliation

Those solutions guarantee estimates that are no worse than the base forecasts in their chosen metric, but they aren't optimal in terms of expected error. They assume a distance metric and find the coherent forecasts that change the base forecasts as little as possible. The MinT reconciliation approach, on the other hand, minimizes the expected distance between the reconciled forecasts and the true values, even if that means changing the base forecasts considerably. The optimization problem it tries to solve is

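$$\min_{\mathbf{G}} \; \operatorname{tr}\left(\mathbf{S}\mathbf{G}\mathbf{W}_h\mathbf{G}'\mathbf{S}'\right) \quad \text{subject to} \quad \mathbf{S}\mathbf{G}\mathbf{S} = \mathbf{S}, \tag{1}$$

where, under that constraint,

$$\mathbf{S}\mathbf{G}\mathbf{W}_h\mathbf{G}'\mathbf{S}' = \mathbb{E}\left[(\mathbf{y}_{T+h} - \tilde{\mathbf{y}}_h)(\mathbf{y}_{T+h} - \tilde{\mathbf{y}}_h)'\right], \qquad \tilde{\mathbf{y}}_h = \mathbf{S}\mathbf{G}\hat{\mathbf{y}}_h,$$

and $\mathbf{W}_h = \mathbb{E}\left[(\mathbf{y}_{T+h} - \hat{\mathbf{y}}_h)(\mathbf{y}_{T+h} - \hat{\mathbf{y}}_h)'\right]$ is the error covariance matrix of the h-step-ahead base forecasts. The constraint $\mathbf{S}\mathbf{G}\mathbf{S} = \mathbf{S}$ keeps unbiased base forecasts unbiased after reconciliation. Equation 1 says that, by minimizing the trace of the covariance matrix of the h-step-ahead reconciled forecast errors, we reduce the expected squared error after reconciliation. The optimal $\tilde{\mathbf{y}}_h$ is obtained by an oblique projection of $\hat{\mathbf{y}}_h$:

$$\tilde{\mathbf{y}}_h = \mathbf{S}\mathbf{G}\hat{\mathbf{y}}_h,$$

where

$$\mathbf{G} = (\mathbf{S}'\mathbf{W}_h^{-1}\mathbf{S})^{-1}\mathbf{S}'\mathbf{W}_h^{-1}.$$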
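Here is a minimal NumPy sketch of the MinT formula. It assumes $\mathbf{W}_h$ is already known; here it is a made-up positive-definite matrix. Estimating it is the subject of the next paragraphs. `mint_G` and `mint_reconcile` are hypothetical helper names.

```python
import numpy as np

S = np.array([[1.0, 1.0],
              [1.0, 0.0],
              [0.0, 1.0]])


def mint_G(S: np.ndarray, W_h: np.ndarray) -> np.ndarray:
    """G = (S' W_h^{-1} S)^{-1} S' W_h^{-1}."""
    W_inv_S = np.linalg.solve(W_h, S)                 # W_h^{-1} S
    return np.linalg.solve(S.T @ W_inv_S, W_inv_S.T)


def mint_reconcile(y_hat: np.ndarray, S: np.ndarray, W_h: np.ndarray) -> np.ndarray:
    """Reconciled forecasts S G y_hat."""
    return S @ mint_G(S, W_h) @ y_hat


# Made-up positive-definite error covariance of the base forecasts.
W_h = np.array([[3.0, 1.0, 1.0],
                [1.0, 2.0, 0.2],
                [1.0, 0.2, 1.0]])
G = mint_G(S, W_h)
y_tilde = mint_reconcile(np.array([100.0, 55.0, 40.0]), S, W_h)
print(G)
print(y_tilde)
```

Scaling `W_h` by a positive constant leaves `G` unchanged, so an estimate that is only proportional to $\mathbf{W}_h$ is enough. With `W_h` equal to the identity, the result reduces to the orthogonal projection.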

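Still, we have to estimate $\mathbf{W}_h$, which is unknown. In practice, $\mathbf{W}_h$ is usually taken to be proportional to the one-step-ahead error covariance matrix $\mathbf{W}_1$, and the proportionality constant cancels out in $\mathbf{G}$. The literature provides two choices for estimating $\mathbf{W}_1$ from in-sample residuals (Athanasopoulos et al., 2020):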

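$\hat{\mathbf{W}}_1 = \frac{1}{T}\sum_{t=1}^{T}\hat{\mathbf{e}}_t\hat{\mathbf{e}}_t'$, where $\hat{\mathbf{e}}_t$ are the in-sample one-step-ahead residuals. This is a naive unconstrained estimator, also known as the sample covariance matrix. While this method is simple and works well for small hierarchies, it does not provide reliable results when the number of series grows. It is singular whenever the number of series exceeds the number of observations $T$. The reconciliation method with this estimator is called MinT(sample).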

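An improved estimator of the covariance matrix, proposed by Schäfer & Strimmer (2005) and Ledoit & Wolf (2004); the latter has one of the funniest titles I've ever seen. The shrinkage estimator is $\hat{\mathbf{W}}_1^{*} = \lambda\hat{\mathbf{W}}_{1,D} + (1 - \lambda)\hat{\mathbf{W}}_1$, where $\hat{\mathbf{W}}_{1,D}$ is a diagonal matrix holding the diagonal of $\hat{\mathbf{W}}_1$. The shrinkage intensity parameter $\lambda$ can be computed from the estimated correlation matrix $\hat{\mathbf{R}}$, with entries $\hat{r}_{ij}$:

$$\hat{\lambda} = \frac{\sum_{i \neq j}\widehat{\operatorname{Var}}(\hat{r}_{ij})}{\sum_{i \neq j}\hat{r}_{ij}^2}.$$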

This estimator safeguards the positive definiteness of the covariance matrix and works better when the number of observations is not considerably larger than the number of series in the hierarchy. The reconciliation method with this estimator is called MinT(shrink).
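Here is a minimal NumPy sketch of both estimators. It assumes `E` is a $T \times n$ array of in-sample one-step-ahead residuals, with one row per time period. The shrinkage intensity follows the Schäfer & Strimmer approach with a diagonal target. The variance of each $\hat{r}_{ij}$ is estimated from the products of standardized residuals. The exact constants in that estimate differ slightly between implementations, so treat this as an illustration rather than a reference implementation. The residuals in the usage lines are random, made-up data.

```python
import numpy as np


def sample_cov(E: np.ndarray) -> np.ndarray:
    """W_1 = (1/T) sum_t e_t e_t' for a (T, n) array of one-step residuals."""
    T = E.shape[0]
    return E.T @ E / T


def shrink_cov(E: np.ndarray) -> tuple[np.ndarray, float]:
    """Shrink the sample covariance towards its diagonal; returns (W, lambda)."""
    T, n = E.shape
    W = sample_cov(E)
    sd = np.sqrt(np.diag(W))
    R = W / np.outer(sd, sd)            # estimated correlation matrix
    X = E / sd                          # standardized (uncentered) residuals
    # Estimated Var(r_ij): variance of the products x_ti * x_tj, divided by T.
    var_r = ((X**2).T @ (X**2) - (X.T @ X) ** 2 / T) / (T * (T - 1))
    off = ~np.eye(n, dtype=bool)        # mask selecting i != j
    lam = float(np.clip(var_r[off].sum() / (R[off] ** 2).sum(), 0.0, 1.0))
    W_shrunk = lam * np.diag(np.diag(W)) + (1.0 - lam) * W
    return W_shrunk, lam


rng = np.random.default_rng(0)
E = rng.normal(size=(3, 5))            # T = 3 periods, n = 5 series (made up)
W_sample = sample_cov(E)
W_shrink, lam = shrink_cov(E)
print(np.linalg.matrix_rank(W_sample), lam)
print(np.linalg.eigvalsh(W_shrink).min() > 0)
```

With only three observations of five series, the sample covariance is singular (rank at most 3). The shrunk estimate remains positive definite and can be passed to the MinT formula.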

Conclusion

This post was meant to be a brief introduction to hierarchical forecasting. Some advances in reconciliation methods have been made in recent years, and we should expect more to come.

Implementing these methods is fairly simple, and the benefits can be substantial. The fable package in R implements them, and they should be straightforward to code from scratch in other languages.

Feel free to contact me if you need some help.

Appendix

Proof of orthogonal projection matrix

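The optimization problem is:

$$\min_{\tilde{\mathbf{b}}_h} \; (\hat{\mathbf{y}}_h - \mathbf{S}\tilde{\mathbf{b}}_h)'(\hat{\mathbf{y}}_h - \mathbf{S}\tilde{\mathbf{b}}_h),$$

where $\tilde{\mathbf{y}}_h = \mathbf{S}\tilde{\mathbf{b}}_h$.

So, setting the gradient with respect to $\tilde{\mathbf{b}}_h$ to zero ($\mathbf{S}'\mathbf{S}$ is invertible because $\mathbf{S}$ has full column rank):

$$-2\mathbf{S}'(\hat{\mathbf{y}}_h - \mathbf{S}\tilde{\mathbf{b}}_h) = \mathbf{0} \;\Rightarrow\; \tilde{\mathbf{b}}_h = (\mathbf{S}'\mathbf{S})^{-1}\mathbf{S}'\hat{\mathbf{y}}_h \;\Rightarrow\; \tilde{\mathbf{y}}_h = \mathbf{S}(\mathbf{S}'\mathbf{S})^{-1}\mathbf{S}'\hat{\mathbf{y}}_h.$$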

Proof of oblique projection matrix

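The optimization problem is

$$\min_{\tilde{\mathbf{b}}_h} \; (\hat{\mathbf{y}}_h - \mathbf{S}\tilde{\mathbf{b}}_h)'\mathbf{W}^{-1}(\hat{\mathbf{y}}_h - \mathbf{S}\tilde{\mathbf{b}}_h),$$

where $\tilde{\mathbf{y}}_h = \mathbf{S}\tilde{\mathbf{b}}_h$.

So, setting the gradient with respect to $\tilde{\mathbf{b}}_h$ to zero:

$$-2\mathbf{S}'\mathbf{W}^{-1}(\hat{\mathbf{y}}_h - \mathbf{S}\tilde{\mathbf{b}}_h) = \mathbf{0} \;\Rightarrow\; \tilde{\mathbf{b}}_h = (\mathbf{S}'\mathbf{W}^{-1}\mathbf{S})^{-1}\mathbf{S}'\mathbf{W}^{-1}\hat{\mathbf{y}}_h \;\Rightarrow\; \tilde{\mathbf{y}}_h = \mathbf{S}(\mathbf{S}'\mathbf{W}^{-1}\mathbf{S})^{-1}\mathbf{S}'\mathbf{W}^{-1}\hat{\mathbf{y}}_h.$$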

Proof of MinT

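The optimization problem is

$$\min_{\mathbf{G}} \; \mathbb{E}\left[\|\mathbf{y}_{T+h} - \tilde{\mathbf{y}}_h\|_2^2\right] \quad \text{subject to} \quad \mathbf{S}\mathbf{G}\mathbf{S} = \mathbf{S}, \qquad \tilde{\mathbf{y}}_h = \mathbf{S}\mathbf{G}\hat{\mathbf{y}}_h.$$

But

$$\mathbf{y}_{T+h} - \tilde{\mathbf{y}}_h = \mathbf{S}\mathbf{G}(\mathbf{y}_{T+h} - \hat{\mathbf{y}}_h),$$

because the true values are coherent, $\mathbf{y}_{T+h} = \mathbf{S}\mathbf{b}_{T+h}$, so

$$\mathbf{S}\mathbf{G}\mathbf{y}_{T+h} = \mathbf{S}\mathbf{G}\mathbf{S}\mathbf{b}_{T+h} = \mathbf{S}\mathbf{b}_{T+h} = \mathbf{y}_{T+h}.$$

Thus, with $\mathbf{W}_h = \mathbb{E}\left[(\mathbf{y}_{T+h} - \hat{\mathbf{y}}_h)(\mathbf{y}_{T+h} - \hat{\mathbf{y}}_h)'\right]$,

$$\mathbb{E}\left[\|\mathbf{y}_{T+h} - \tilde{\mathbf{y}}_h\|_2^2\right] = \mathbb{E}\left[(\mathbf{y}_{T+h} - \hat{\mathbf{y}}_h)'\mathbf{G}'\mathbf{S}'\mathbf{S}\mathbf{G}(\mathbf{y}_{T+h} - \hat{\mathbf{y}}_h)\right] = \operatorname{tr}\left(\mathbf{S}\mathbf{G}\mathbf{W}_h\mathbf{G}'\mathbf{S}'\right).$$

The optimization problem can be written in terms of minimization of the trace:

$$\min_{\mathbf{G}} \; \operatorname{tr}\left(\mathbf{S}\mathbf{G}\mathbf{W}_h\mathbf{G}'\mathbf{S}'\right) \quad \text{subject to} \quad \mathbf{S}\mathbf{G}\mathbf{S} = \mathbf{S}.$$

Following the proof in the appendix of Wickramasuriya et al. (2019), the optimal $\mathbf{G}$ is:

$$\mathbf{G} = (\mathbf{S}'\mathbf{W}_h^{-1}\mathbf{S})^{-1}\mathbf{S}'\mathbf{W}_h^{-1}.$$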
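The result can also be checked numerically with a minimal NumPy sketch, using a made-up $\mathbf{W}_h$. The sketch compares the trace objective for the MinT $\mathbf{G}$ against other admissible choices: bottom-up, the orthogonal projection, and random matrices satisfying $\mathbf{G}\mathbf{S} = \mathbf{I}$. Because $\mathbf{S}$ has full column rank, that condition is equivalent to $\mathbf{S}\mathbf{G}\mathbf{S} = \mathbf{S}$. This is an illustration, not a proof.

```python
import numpy as np

S = np.array([[1.0, 1.0],
              [1.0, 0.0],
              [0.0, 1.0]])
# Made-up positive-definite error covariance of the base forecasts.
W_h = np.array([[3.0, 1.0, 1.0],
                [1.0, 2.0, 0.2],
                [1.0, 0.2, 1.0]])


def trace_objective(G: np.ndarray) -> float:
    """tr(S G W_h G' S'): expected squared error of the reconciled forecasts."""
    return float(np.trace(S @ G @ W_h @ G.T @ S.T))


W_inv_S = np.linalg.solve(W_h, S)
G_mint = np.linalg.solve(S.T @ W_inv_S, W_inv_S.T)
G_ols = np.linalg.solve(S.T @ S, S.T)               # orthogonal projection
G_bu = np.hstack([np.zeros((2, 1)), np.eye(2)])     # bottom-up

# Any G = G_ols + B (I - P), with P = S G_ols, still satisfies G S = I.
P = S @ G_ols
rng = np.random.default_rng(0)
G_random = [G_ols + rng.normal(size=(2, 3)) @ (np.eye(3) - P) for _ in range(100)]

best = trace_objective(G_mint)
for G in [G_ols, G_bu, *G_random]:
    assert np.allclose(G @ S, np.eye(2))            # admissible choice
    assert best <= trace_objective(G) + 1e-9        # MinT is never beaten
print(best, trace_objective(G_ols), trace_objective(G_bu))
```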

References

- Athanasopoulos, G., Gamakumara, P., Panagiotelis, A., Hyndman, R. J., & Affan, M. (2020). Hierarchical Forecasting. Advanced Studies in Theoretical and Applied Econometrics, 52(February), 689–719. https://doi.org/10.1007/978-3-030-31150-6_21

- Panagiotelis, A., Athanasopoulos, G., Gamakumara, P., & Hyndman, R. J. (2021). Forecast reconciliation: A geometric view with new insights on bias correction. International Journal of Forecasting, 37(1), 343–359. https://doi.org/10.1016/j.ijforecast.2020.06.004

- Gross, C. W., & Sohl, J. E. (1990). Disaggregation methods to expedite product line forecasting. Journal of Forecasting, 9(3), 233–254. https://doi.org/10.1002/for.3980090304

- Wickramasuriya, S. L., Athanasopoulos, G., & Hyndman, R. J. (2019). Optimal Forecast Reconciliation for Hierarchical and Grouped Time Series Through Trace Minimization. Journal of the American Statistical Association, 114(526), 804–819. https://doi.org/10.1080/01621459.2018.1448825

- Banerjee, S., & Roy, A. (2014). Linear algebra and matrix analysis for statistics. CRC Press.

- Schäfer, J., & Strimmer, K. (2005). A shrinkage approach to large-scale covariance matrix estimation and implications for functional genomics. Statistical Applications in Genetics and Molecular Biology, 4(1).

- Ledoit, O., & Wolf, M. (2004). Honey, I shrunk the sample covariance matrix. Journal of Portfolio Management, 30(4), 1–22. https://doi.org/10.3905/jpm.2004.110